This article was written in collaboration with academics from Ghent University and is part of our 'Co-thinking about the Future' magazine.

There have always been advocates and opponents of new technologies, but recently the debate has become fiercer than ever. The reason is that some people believe new developments in robotics and AI could spell the end of humanity.

An intelligent supercomputer

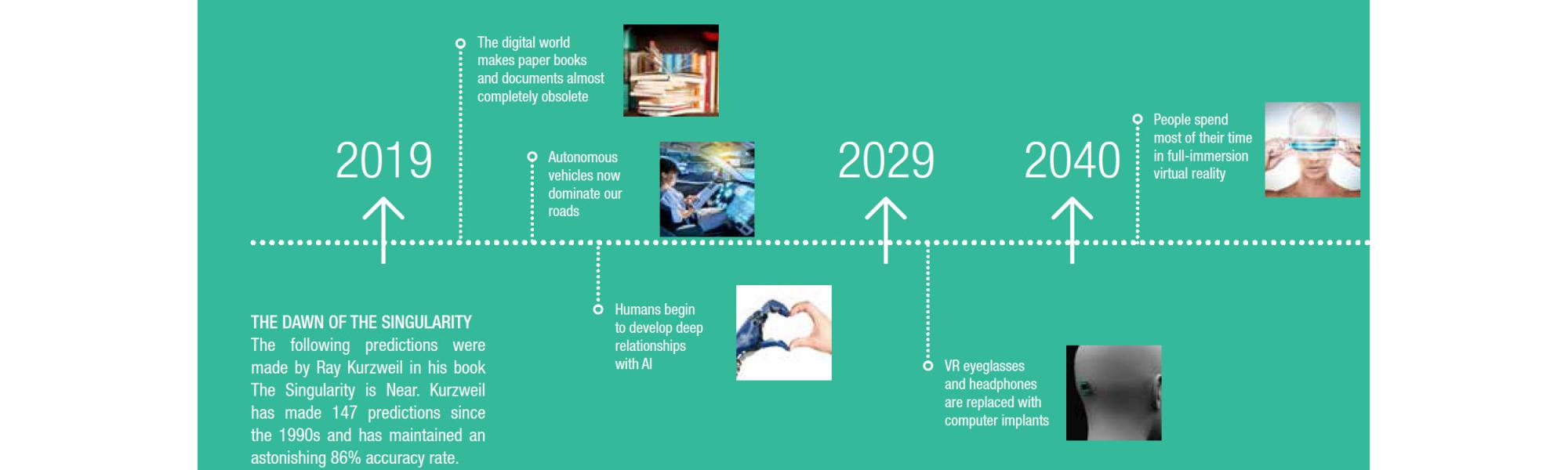

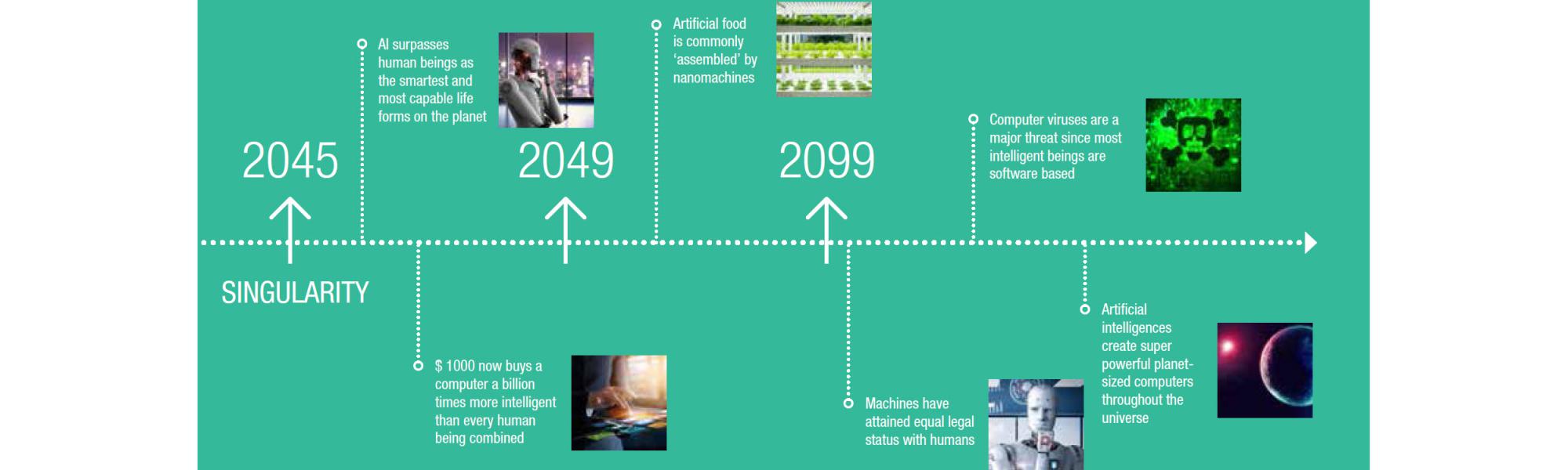

One term that always crops up in these discussions is ‘singularity’. But what does it mean? Thomas Verschueren from Realdolmen explains: ‘Put simply, singularity is the point in the future beyond which, with the best will in the world, we can no longer make any meaningful predictions. New developments will be so advanced that they will trigger runaway technological growth, making literally anything possible.’ We’re mainly talking about developments in robotics and computer technology, but there will be new discoveries and inventions in other fields too, for example in genetics and nanotechnology. Experts believe the crucial factor will lie predominantly in the possibilities of artificial intelligence (AI). Advances in this field have accelerated dramatically in recent years, leading some people to start dreaming of AI with its own consciousness.

‘Think of a supercomputer with unimaginable processing power and access to pretty much every source of information in the world,’ explains Verschueren. ‘Add algorithms that enable the technology to recognise patterns in all this data, perceive all problems and discover opportunities, and you have something like Skynet from the Terminator films. Especially if that computer system is connected to a huge number of robots, which act slavishly – because that’s what robots do – to execute the system’s instructions.’